Are You Ready? FDA's Transition From Computer System Validation To Computer Software Assurance

By Kathleen Warner, Ph.D., RCM Technologies

FDA regulation 21 CFR Part 11, issued in 1997, and the related 2003 guidance paved the way for life sciences companies to implement computer system validation (CSV).

As pharmaceutical companies perfected their business processes and became more efficient in validating computer systems, the piles of documentation continued to grow without significant quality benefits. The focus was on speed, documentation accuracy and completeness, inspections, audits, and complying with the regulation.

In 2011, the Center for Devices and Radiological Health (CDRH) initiated the Case for Quality, a new program that identified barriers in the current guidance on validation of software in medical devices (released in 2002). Now CDRH, in cooperation with the Center for Biologics Evaluation and Research (CBER) and the Center for Drug Evaluation and Research (CDER), is preparing to release new guidance, Computer Software Assurance for Manufacturing, Operations and Quality System Software, in late 2020.

This new guidance will provide guidelines for streamlining documentation with an emphasis on critical thinking, risk management, patient and product safety, data integrity, and quality assurance. Even though this guidance is being developed for the medical device industry, the FDA has indicated it should be considered when deploying non-product, manufacturing, operations, and quality system software solutions such as quality management systems (QMS), enterprise resource planning (ERP) systems, laboratory information management systems (LIMS), learning management systems (LMS), and electronic document management systems (eDMS). As such, the guidance will be applicable to research and development (R&D), clinical, laboratory, and other groups within pharmaceutical, biopharmaceutical, and medical device companies that are currently meeting the regulations for electronic records and electronic signatures (ERES) [1] and computer system validation (CSV) [2].

As the digital world evolves, new technologies, including automation and artificial intelligence (AI), are hitting the marketplace and focusing on quality through unscripted and automated testing and smart applications. This paper describes how computer system validation (CSV) is performed today, introduces the concept of computer software assurance (CSA), and discusses how CSA can refocus validation on what’s important in the new world of digital technologies.

Computer System Validation is the process of achieving and maintaining compliance with the relevant GxP regulations defined by the predicate rule [3]. Fitness for intended use is achieved by adopting principles, approaches, and life cycle activities. The validation methodology determines the framework to follow in developing the validation plans and reports and applying appropriate operational controls throughout the life cycle of the system.

CSV is typically performed following the organization's standard operating procedures (SOPs) for the software development life cycle (SDLC) and for CSV. According to GAMP 5, the computerized system validation methodology comprises four distinct life cycle phases: Concept, Project, Operation, and Retirement.

- Concept Phase: During this phase, activities are initiated to justify project commencement.

- Project Phase: During this phase, the overall system is implemented and tested according to preapproved documents. SDLC deliverables include, but are not limited to, the following: validation methodology and plan, requirements, software documentation and test plans, traceability matrix, and summary reports.

- Operation Phase: During this phase, routine, day-to-day activities associated with the system (as defined in user manuals, SOPs, work instructions, etc.) are performed and include, but are not limited to, the following: backup and restore, disaster recovery, change management, incident/deviation management, access and security management, and periodic review.

- Retirement Phase: During this phase, the system is no longer used, needed, or operational and is at the end of its life cycle. A retirement/decommissioning plan is developed to document the approach and tasks to manage the data and records.
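To make the traceability matrix mentioned in the Project Phase more concrete, here is a minimal Python sketch of the kind of check such a matrix supports: confirming that every requirement is covered by at least one test and that no executed test lacks a requirement. The `Requirement` class, the ID formats (`URS-001`, `TC-010`), and the sample data are hypothetical and chosen only for illustration; they are not prescribed by GAMP 5 or by the FDA.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One row of a hypothetical traceability matrix."""
    req_id: str
    description: str
    test_ids: list[str] = field(default_factory=list)  # tests that verify this requirement


def untraced_requirements(requirements: list[Requirement]) -> list[str]:
    """Return IDs of requirements that no test case traces back to."""
    return [r.req_id for r in requirements if not r.test_ids]


def orphan_tests(requirements: list[Requirement], executed_tests: set[str]) -> set[str]:
    """Return executed tests that are not linked to any requirement."""
    linked = {t for r in requirements for t in r.test_ids}
    return executed_tests - linked


# Illustrative data only.
requirements = [
    Requirement("URS-001", "System enforces unique user logins", ["TC-010", "TC-011"]),
    Requirement("URS-002", "Audit trail records every record change", ["TC-020"]),
    Requirement("URS-003", "Nightly backup completes and can be restored"),
]
executed = {"TC-010", "TC-011", "TC-020", "TC-099"}

print(untraced_requirements(requirements))   # ['URS-003']
print(orphan_tests(requirements, executed))  # {'TC-099'}
```

In this example, URS-003 has no test coverage and TC-099 verifies nothing that was specified; both are gaps a summary report would need to address.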

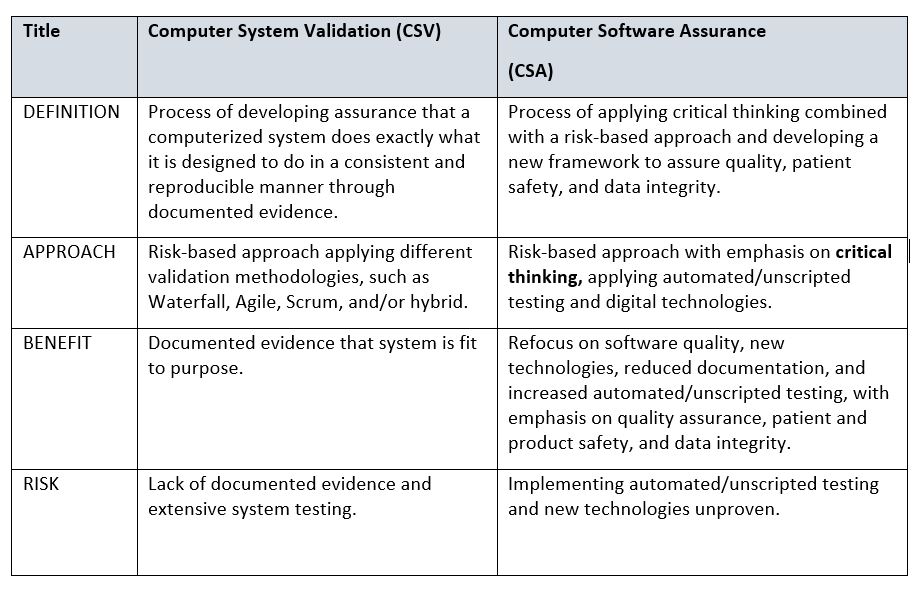

At a high level, the table below outlines some differences between CSV and CSA. Your organization should perform a more detailed comparison, with the goal of addressing change management and any associated costs involved in transitioning from CSV to CSA.

Table 1: Comparison of Computer System Validation (CSV) and Computer Software Assurance (CSA)

CSA turns things upside-down with a new approach that emphasizes critical thinking [4] (asking why software developers are developing code rather than what they are developing) and assurance that risk is mitigated through a risk-based approach, automated and unscripted testing, and documentation to achieve greater quality, patient and product safety, and data integrity. This new approach translates into doing more critical thinking and testing during the software development life cycle and placing less emphasis on documentation. However, it is important to note that the right level of documentation is still necessary to avoid the familiar FDA comment:

“If it’s not documented, it didn’t happen.”

Benefits that can be realized include reduced development time, reduced costs, reduced documentation, and smarter software systems.
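One way to picture the risk-based approach is as a simple decision rule that scales testing rigor and documentation to risk. The Python sketch below is an illustration under stated assumptions: the two rating dimensions, the three-level scale, and the mapping to assurance activities are all hypothetical and are not taken from FDA guidance. Your own procedures would define the actual criteria.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def assurance_activity(patient_or_product_impact: Risk, implementation_risk: Risk) -> str:
    """Pick a testing approach from two hypothetical risk ratings.

    The mapping is illustrative only; it is not an FDA-defined rule.
    """
    # Take the worse of the two ratings so a single high rating drives the rigor.
    overall = max(patient_or_product_impact.value, implementation_risk.value)
    if overall == Risk.HIGH.value:
        return "scripted testing with documented evidence"
    if overall == Risk.MEDIUM.value:
        return "unscripted (exploratory) testing with a summary record"
    return "rely on vendor assurance and record the rationale"


# Hypothetical features of a quality system application.
features = {
    "electronic signature on batch release": (Risk.HIGH, Risk.MEDIUM),
    "training record search": (Risk.MEDIUM, Risk.LOW),
    "report font selection": (Risk.LOW, Risk.LOW),
}

for name, (impact, implementation) in features.items():
    print(f"{name}: {assurance_activity(impact, implementation)}")
```

The point of the sketch is the shape of the decision, not the thresholds: effort concentrates on features that can affect patient and product safety or data integrity, while low-risk features receive lighter treatment with a recorded rationale.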

The CSV SDLC models used for developing software include, but are not limited to, the following:

- Waterfall - a model that uses a linear, sequential approach.

- Spiral - a model used for risk management that combines the iterative development process model (i.e., approaching the desired result through repeated cycles) with elements of the Waterfall model.

- Agile - a model that refers to a group of software development methodologies (e.g., Scrum and Kanban) based on iterative development, where requirements and solutions evolve through collaboration between self-organizing cross-functional teams.

- Hybrid - a combination of two different models or systems to create a new and better model. Hybrid methodologies accept the fluidity of projects and allow for a more nimble and nuanced approach to the work. They can be applied to the full job or specific aspects of the project.

The new CSA model may look something like this, with the interconnection of each circle representing the key elements of the approach. Existing CSV models and methodologies will need to be evaluated to determine whether they need to be retrofitted to align with the CSA guidance.

Where To Start

To understand the differences between CSV and CSA, you need to research and understand your current environment before you transition to a new framework, methodology, or approach. An assessment shows where you are spending your time: planning, designing, testing, documenting, etc. If you understand your current business processes, resource utilization, gaps, and areas needing improvement, you can develop a transition plan that focuses on what’s important: quality assurance, patient and product safety, and data integrity. Streamlined business processes, critical thinking, quality, efficiency, and effectiveness are the cornerstones for these areas of focus. The assessment should include quantitative and/or qualitative analysis of people, process, and technology, along with metrics (i.e., quantifiable measures of your business processes and performance) to measure your operational performance.

You may ask what types of metrics should be included in your assessment and how you can gather data that aligns with these metrics. A good place to start is to define the categories for which you want to establish measurements, such as people, process, project, and product (which includes technology). What do these categories have in common? They measure how well your organization is performing against specific criteria and provide you with data, positive or negative, on what is working and what needs more attention to improve operational performance.
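As a starting point for the assessment, you could total logged hours by activity to see where validation effort actually goes. The following Python sketch assumes time entries are available as (activity, hours) pairs; the activity names and hours are invented for illustration.

```python
from collections import Counter


def effort_share(time_entries: list[tuple[str, float]]) -> dict[str, float]:
    """Return each activity's share of total logged hours, as a percentage."""
    totals: Counter = Counter()
    for activity, hours in time_entries:
        totals[activity] += hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    # Largest share first, rounded to one decimal place.
    return {a: round(100 * h / grand_total, 1) for a, h in totals.most_common()}


# Hypothetical time log for one validation project.
entries = [
    ("documenting", 40),
    ("testing", 20),
    ("planning", 10),
    ("designing", 10),
    ("documenting", 20),
]

for activity, share in effort_share(entries).items():
    print(f"{activity}: {share}%")
```

With these sample numbers, documenting accounts for 60% of the effort and testing for 20%, which is the kind of imbalance a transition to CSA is meant to address.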

Categories And Metrics

You can use the assessment process to learn more about your CSV performance by applying metrics for the following categories:

- People metrics – measure performance against objectives and goals, as well as the skill levels and abilities of team members.

- Process metrics – measure quality of performance, progress, process, or activity that can be analyzed and assessed. Qualitative or quantitative methods can be used to assess the operational processes and focus on what is working well.

- Product metrics – measure the specific criteria that will help research, manufacturing, and marketing evaluate the success of your product and product characteristics (e.g., quality, testing, and number of deviations).

- Project metrics – measure key performance indicators (KPIs) related to project management and project control. Typically, they include quality of deliverables, schedule variance and performance, cost associated with missed deadlines, work-in-progress, cancellations, and customer satisfaction.
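Two of the metrics named above can be computed with very little data. The Python sketch below uses the standard earned value definitions from project management (schedule variance = earned value minus planned value; schedule performance index = earned value divided by planned value), which are common practice rather than something this article or the FDA prescribes. The deviation rate helper and all sample figures are hypothetical.

```python
def schedule_variance(earned_value: float, planned_value: float) -> float:
    """Earned value minus planned value; negative means behind schedule."""
    return earned_value - planned_value


def schedule_performance_index(earned_value: float, planned_value: float) -> float:
    """Earned value divided by planned value; below 1.0 means behind schedule."""
    if planned_value == 0:
        raise ValueError("planned_value must be non-zero")
    return earned_value / planned_value


def deviations_per_100_tests(deviations: int, tests_executed: int) -> float:
    """Hypothetical product metric: deviations raised per 100 executed tests."""
    if tests_executed == 0:
        raise ValueError("tests_executed must be non-zero")
    return 100 * deviations / tests_executed


# Illustrative figures: 120 hours of work planned to date, 90 hours' worth completed.
print(schedule_variance(90, 120))           # -30
print(schedule_performance_index(90, 120))  # 0.75
print(deviations_per_100_tests(6, 240))     # 2.5
```

Tracking the same figures before and after the transition gives you a baseline against which to judge whether CSA is delivering the expected gains.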

Next Steps

After collecting real data and metrics, you can better assess your organization’s performance. By identifying areas requiring improvement and areas of opportunity, you are now positioned to develop the transition plan to CSA.

- Develop a Transition Plan – Create, document, and execute your future road map that specifies roles, responsibilities, deliverables, and measured outcomes. Metrics, data collection, and risks should be established and addressed in this plan.

- Perform CSV Assessment – Customize your CSV assessment against the FDA’s future CSA guidance to ensure you are focused on what you can do differently to assure quality, patient and product safety, and data integrity.

- Develop and Train on CSA – Helping your team understand the importance of these concepts, existing and/or new, is critical to a successful transition:

  - Critical thinking – what critical thinking is and how to apply it.

  - Risk-based approach – the definition of risk and how to develop an approach to mitigate it.

  - CSA – refer to the upcoming FDA guidance and start learning what is changing and why.

  - Validation methodology/framework – focus on added value, increased efficiencies and effectiveness, and improved quality assurance, patient and product safety, and data integrity.

- Revise Change Management Business Processes – Review what you have today and update/streamline your business processes to enable you to focus on what’s important: quality assurance, patient and product safety, and data integrity.

Conclusion

As the FDA prepares to release its new guidance on Computer Software Assurance for Manufacturing, Operations and Quality System Software later this year, life sciences companies need to be proactive and develop a strategy on how to transition their current CSV methodology and approach to a CSA methodology and approach that focuses on quality assurance, patient and product safety, and data integrity.

References:

1. Code of Federal Regulations, Title 21, Food and Drugs, Part 11, Electronic Records; Electronic Signatures; Final Rule. Federal Register 62 (54), 13429-13466.

2. ISPE, GAMP 5: A Risk-Based Approach to Compliant GxP Computerized Systems. © Copyright ISPE 2008. All rights reserved.

3. Predicate Rule, https://www.fda.gov/medical-devices/premarket-notification-510k/how-find-and-effectively-use-predicate-devices

4. “Critical thinking is the intellectually disciplined process of actively and skillfully conceptualizing, applying, analyzing, synthesizing, and/or evaluating information gathered from, or generated by, observation, experience, reflection, reasoning, or communication, as a guide to belief and action.” www.google.com, Jul 1, 2019.

About The Author:

Kathleen Warner, Ph.D., VP of Consulting Services for RCM Technologies, Life Sciences, is an executive consultant with 25+ years of experience in information technology (IT) and the life sciences. She has served as a chief information officer, subject matter expert, and domain expert in regulated environments. As a management consultant, Warner has provided oversight for hundreds of life science projects both in the U.S. and globally. Her strengths include leadership, advisory, organization change management, business process analyses, and program/project management engagements. As a practitioner and technologist, Warner has performed future cloud assessments to define the IT infrastructure roadmap, clinical sample management process analyses, R&D IT program management, and more.
