Achieving Realism In Human Factors Work: How To Stay Out Of Fantasy Land

By Chad Uy, Design Science

In our last article, we discussed the key ingredients of a summative usability study. Here, we focus in more depth on a central issue in usability studies: "representativeness." The FDA uses the term "representative" repeatedly when describing its requirements for usability studies (cf. the draft guidance Applying Human Factors and Usability Engineering to Optimize Medical Device Design, 2011). We interpret this as a call to infuse every step of the device-development process with as much realism as possible.

What follows are some methods to achieve representativeness.

Use Real-World Observation To Drive Product Requirements

It goes without saying that a full understanding of what users want and need leads to better, more suitable devices. One common strategy for understanding those wants and needs is simply to ask users, for example through surveys or focus groups. However, relying solely on what people say can produce an inaccurate picture of the problems they face.

People's memories of previous events, and their predictions of how they will react to products or situations they have not yet experienced, are notoriously fallible. What people say often fails to match what they actually do, let alone what they will do in some hypothetical situation. Much of human conduct is unconscious. People also have complicated agendas that compromise the accuracy of their answers. For example, they may skew information to paint themselves in a better light, or leave out information they assume is unimportant to the researcher.

The antidote: Use real-world observation (contextual inquiry) with video documentation.

Contextual inquiries allow researchers to step into the user's world. This approach not only provides an unfiltered, more accurate view of what is actually happening, but also offers the opportunity to see beyond one's preconceptions and to experience the world from the user's perspective.

By being "on the spot," researchers can ask questions that users answer from perception rather than memory, and they can pose new questions as these emerge from ongoing observation. Researchers can directly observe the environmental and interpersonal factors that affect actions and decision-making, and they can pick up on details that the person being observed may not notice. Such firsthand experience helps researchers understand what is truly "representative," as opposed to what is based on "lore": the tales and tidbits that inevitably accumulate in any organization in the absence of good data.

Contextual inquiry provides a wide variety of information on users' wants and needs, depending on how it is designed and where in the product development cycle it takes place. Contextual inquiries early in the cycle are open-ended. They capture how people use the products and processes currently on the market in order to identify unmet needs and opportunities for improvement. Contextual inquiries later in the cycle feature a prototype, because by then the product concept has become more definitive. Through a task-structured session, researchers can explore how users engage with the prototype in the actual use environment, using a mixture of closed- and open-ended questions to identify advantages, constraints, and potential usability improvements.

Use real-world observations to inform the design-development process.

Create “High-Fidelity” Prototypes

Once ideas begin to emerge, of course, they have to be translated into some form of prototype that can be tested with users. Paper prototypes and screen simulations are common options early in the development process. The difficulty with such "low-fidelity" approaches is that conclusions drawn from them may not apply to the actual product, because the low fidelity itself alters performance. Paper prototypes are static, so they omit the dynamic behavior that may be at the heart of a device's usability. Simulating a device on a PC may yield results that do not hold when the real display is a small screen and the controls are an array of physical buttons rather than a mouse.

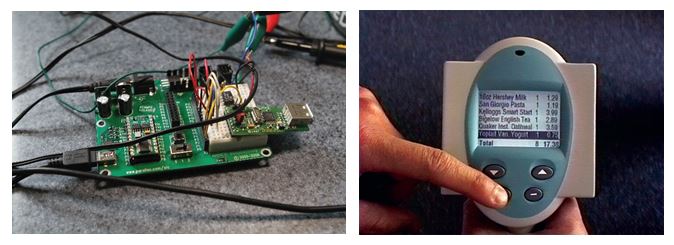

The antidote: Use microcontrollers and rapid-prototyping software to achieve early realism, with real input devices and real displays.

The technology now exists to build relatively realistic prototypes very early in the design process. Physical prototypes can be "cobbled together" from existing parts, using actual input devices and visual displays. Software can be simulated with increasingly sophisticated rapid-prototyping tools. The trick is to tether the hardware to the software so that the inputs and outputs mimic the intended form of the final device.

When considering outputs, remember that for audio, the choice of speaker and audio file format can greatly affect the sound produced. For visual outputs, resolution, color depth, brightness, viewing angle, and the software's transitions and animations all matter. Each of these factors can change how realistic a prototype feels.

Such an integrated approach also ensures that hardware and software are being considered together, rather than proceeding along separate development tracks that may cause integration problems later.

Early, realistic prototyping can produce more accurate data.

Incorporate More Realism Into Usability Testing

In usability testing, the environment and situations presented to participants are inextricably linked to the results one obtains. Unrealistic environments, which are inevitable in testing labs, can lead to unrealistic performance. Participants may perform well in the lab, yet their performance may decline when they carry out the same tasks in the "real world," with poor lighting, loud background noise, and multiple distractions.

The antidote: Create more realistic conditions in our testing labs.

Setting up the room to simulate the intended use environment is the starting point. For example, if a product will be used in a clinical setting, props such as gloves, alcohol swabs, and a patient bed can make the environment more realistic. If the product will be used at home, a couch, house plants, and a TV can make the room feel more like a living room. Although such props might seem to make little difference, we have seen participants make critical errors because items that would normally be present in the real setting were missing and so could not cue them.

Likewise, if people typically take phone calls, tend to their children, or watch television while using a device, leaving out such distractions gives a false picture of the device's actual performance. Devices used in high-stress situations, such as epinephrine auto-injectors, are particularly difficult to test. Performance in "cold blood" may not predict actual performance at all.

Methods for increasing stress in the testing room include displaying a large timer, having multiple observers in the room, and blaring heavy metal music through headphones. All of these methods have been used successfully in real studies.

Another important issue is which participants to include in usability testing. Quick tests are sometimes run with coworkers and colleagues, but these people are unlikely to be representative of actual users and may therefore provide inaccurate data. Difficulties that older users have in handling a product, for example, may not surface when younger people are tested. And, of course, coworkers know more about the product or topic than the average user does.

Finally, the test situation itself can alter a participant's state of mind. It adds stress for some, while it allows others to detach psychologically because they know they are in a low-risk environment. To put participants at ease, it generally helps to create a friendly, judgment-free environment by emphasizing that the device, not the participant's performance, is being tested. Taking breaks can help, too.

Realistic testing room setups can put participants in the right state of mind.

Conclusion

Obtaining good usability data requires good representativeness. Contextual inquiry makes early research more representative. Usability testing becomes more representative with good hardware-software integration in early prototypes and with careful attention to the realism of participants, environments, and tasks. The goal is data that genuinely reflects real users in real conditions. Without such data, conclusions about a device's usability may not hold once it reaches the field.

About The Author

Chad Uy holds a B.S. in mechanical engineering from Tufts University and an M.S. in industrial engineering (concentration in human factors) from North Carolina State University. His expertise spans all dimensions of human factors research, from assessing client needs and designing study programs, to executing usability testing and developing regulatory submissions.