Best Practices To Use Virtual Reality Methodology For Medical Device Design & Development

By Sean Hägen, BlackHägen Design

In the research and design of medical devices, virtual reality (VR) technology has rapidly gained traction as an attractive alternative to in-person evaluation methods, which have been largely suspended during the pandemic. Technological advancements in gaming have significantly contributed to VR’s affordability and ease of use in development tools for a variety of industries. While VR has traditionally been a valuable application for training, it has become extremely beneficial upstream in early device development initiatives. This article will review how VR methodology can augment or even replace the configuration modeling that is designed to inform system architecture, especially in relation to user interfaces (UI).

Evaluation Methodologies

The predicate methodology involves designing study models that enable the medical device design team to evaluate, at a feature level, disparate user interface configurations prior to system engineering, design for manufacturability, and industrial design. This approach complements other system-level design inputs that impact requirements, such as manufacturability, service, sustainability, and power management, by providing insights gained from the end user’s needs and context of use. More mock-up or prop than prototype, these study models are not iterations of a design direction under development. They are focused on giving a user a way to experience a set of alternative user interface configurations and demonstrate their preferred features. Each alternative model is purposely configured to present UI features differently. For example, the overall UI is configured in a portrait or landscape orientation, handles are positioned at different attitudes, disposable sets are loaded from different approaches, etc. Study participants are thus able to isolate and determine preferred feature configurations and indicate why they are preferred in the context of use without committing to an overall design solution.

Engineering each study model in CAD provides the study participant with an appropriate level of interaction in order to experience the UI in a meaningful way. In a real-world scenario, features that open and close will need engineered hinges. Although these CAD models do not go through the extensive process of design for manufacturing, it still takes significant time to engineer such features in a non-VR world. Additionally, the models then have to be fabricated and debugged. All this engineering time and CAD data is effectively “throw away engineering,” because it does not yet represent the actual design direction. The development time for a study model can be drastically reduced by providing the study participant with a virtual means of evaluating the study models. Evaluation of virtual models eliminates the need to engineer all the functional details and the fabrication. Instead, functions are assigned kinematic characteristics. So, if a display is to tilt and swivel, the extent of those kinematics is defined without designing the actual mechanisms.
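To illustrate what "assigning kinematic characteristics" can look like in practice, here is a minimal sketch in Python. It is not tied to any particular VR toolkit; the class names (`RotationLimit`, `VirtualDisplay`) and the angle ranges are hypothetical and chosen only for illustration. The point is that the display's range of motion is described by limits alone, with no hinge or mechanism geometry behind it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RotationLimit:
    """Allowed range of motion for one rotational axis, in degrees."""
    min_deg: float
    max_deg: float

    def clamp(self, requested_deg: float) -> float:
        """Return the requested angle, limited to the allowed range."""
        return max(self.min_deg, min(self.max_deg, requested_deg))


@dataclass
class VirtualDisplay:
    """A display whose motion is defined by limits alone, with no hinge design."""
    tilt_limit: RotationLimit
    swivel_limit: RotationLimit
    tilt_deg: float = 0.0
    swivel_deg: float = 0.0

    def move_to(self, tilt_deg: float, swivel_deg: float) -> None:
        """Apply a participant's requested pose, stopping at the defined extents."""
        self.tilt_deg = self.tilt_limit.clamp(tilt_deg)
        self.swivel_deg = self.swivel_limit.clamp(swivel_deg)


# Hypothetical extents, assumed for this example only.
display = VirtualDisplay(
    tilt_limit=RotationLimit(-15.0, 30.0),
    swivel_limit=RotationLimit(-90.0, 90.0),
)

# The participant tries to push the display past both extents.
display.move_to(tilt_deg=45.0, swivel_deg=-120.0)
print(display.tilt_deg, display.swivel_deg)  # 30.0 -90.0
```

Changing a configuration alternative then amounts to editing two pairs of numbers rather than re-engineering a mechanism.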

Contextual Environment Simulation

While there is an up-front investment in creating the VR environment of use, it is reusable. By contrast, a study using fabricated models would require a high-fidelity simulated operating room (OR) to provide context. For example, in a VR study, if configurations for a surgical robot are to be evaluated and the contextual environment is an OR, all the people and equipment are critical for the realistic evaluation of the system. The OR environment should have surgical lights, monitors, patient table, booms, IV poles, anesthesia cart, infusion pumps, etc. This level of contextual realism enables the following parameters to be assessed:

- Footprint integration (relative to people and other equipment)

- Dimension priorities (height, width, and depth)

- Line of sight (relative to people and other equipment)

- Dynamic UI interferences (kinematics)

- Collisions with other equipment

- Access to user interfaces

- Component configuration

- User interface layout

- Maneuverability
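Several of the parameters above, such as footprint integration and collisions with other equipment, reduce to geometric checks inside the virtual environment. The sketch below shows one simplified way to flag footprint overlaps on the OR floor plan using axis-aligned rectangles. The `Footprint` class, the `find_collisions` helper, and all dimensions are assumptions made for illustration; a real VR engine would typically use its own 3D collision system.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Footprint:
    """Axis-aligned floor rectangle for one item, in meters."""
    name: str
    x: float      # left edge
    y: float      # bottom edge
    width: float  # extent along x
    depth: float  # extent along y

    def overlaps(self, other: "Footprint") -> bool:
        """True when the rectangles share floor area (touching edges do not count)."""
        return (
            self.x < other.x + other.width
            and other.x < self.x + self.width
            and self.y < other.y + other.depth
            and other.y < self.y + self.depth
        )


def find_collisions(items: list[Footprint]) -> list[tuple[str, str]]:
    """Return every pair of item names whose footprints overlap."""
    return [(a.name, b.name) for a, b in combinations(items, 2) if a.overlaps(b)]


# Hypothetical layout; positions and sizes are invented for the example.
room = [
    Footprint("patient table", 2.0, 2.0, 0.6, 2.0),
    Footprint("robot base", 2.5, 2.5, 1.0, 1.0),
    Footprint("anesthesia cart", 0.5, 4.5, 0.7, 0.6),
]

print(find_collisions(room))  # [('patient table', 'robot base')]
```

Here the robot base intrudes on the patient table's footprint, which is the kind of conflict a participant would otherwise discover only by walking through the scene.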

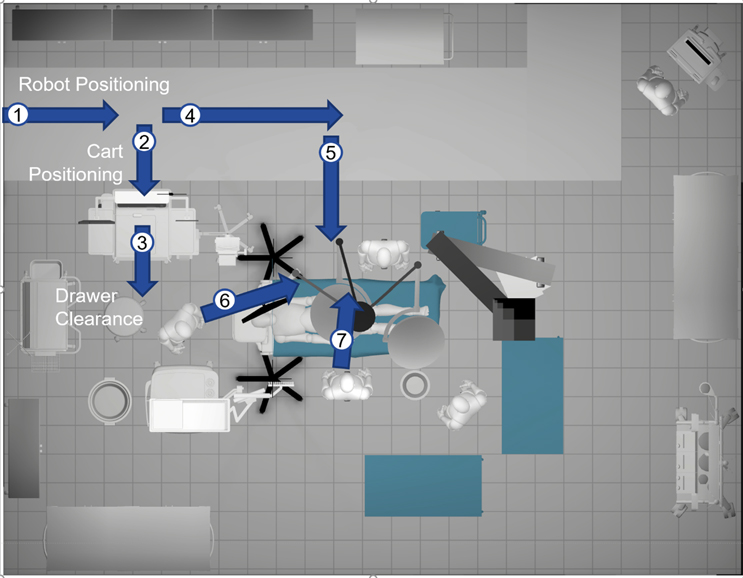

For the actual use-case scenario, a storyboard approach is often used to identify which actors need interactive features – or kinematics. A script supports the storyboard (Figure 1), which can be modified by the developer if the environment and actor kinematics need to be adjusted.

The script tells the developer which items will require kinematic features for the robot-assisted surgery scenario, such as the medication cart, robot, lights, monitors, and patient table. Participants can “suit up” and try out the experience. In this scenario, the physical room needs to be about the same size as the virtual space you want the participant to explore. To support the user experience, a moderator’s study guide is helpful to provide prompts for participant input. Questions and discussion points should be listed, along with encouragement for the participant to “think aloud.” This means the participant responds verbally to the moderator’s prompts to execute tasks and then explains why one configuration is preferred over another. Questions might be something like, “Is there a comfortable amount of space between the surgical team and the robot to exchange instruments?” or, if the robot-assisted surgery has to convert to an open procedure, “Can the team easily interact with the robot?”
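The script and moderator prompts described above can be captured in a simple data structure that the developer and the moderator share. The following Python sketch is one hypothetical way to do so; the `Actor` and `SceneScript` classes and the specific kinematic labels are assumptions for illustration, while the actor names and prompts are taken from the scenario above.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    """One item in the scene; an empty kinematics list means a static prop."""
    name: str
    kinematics: list[str] = field(default_factory=list)

    @property
    def is_interactive(self) -> bool:
        return bool(self.kinematics)


@dataclass
class SceneScript:
    """Storyboard script: the scenario, its actors, and the moderator's prompts."""
    scenario: str
    actors: list[Actor]
    prompts: list[str]

    def interactive_actors(self) -> list[str]:
        """Names of the actors the developer must give kinematic features."""
        return [a.name for a in self.actors if a.is_interactive]


# Kinematic labels below are hypothetical examples, not a required vocabulary.
script = SceneScript(
    scenario="robot-assisted surgery",
    actors=[
        Actor("medication cart", ["roll"]),
        Actor("robot", ["roll", "arm articulation"]),
        Actor("lights", ["swivel", "tilt"]),
        Actor("monitors", ["swivel", "tilt"]),
        Actor("patient table", ["raise", "lower"]),
        Actor("IV pole"),  # static in this sketch
    ],
    prompts=[
        "Is there a comfortable amount of space between the surgical team "
        "and the robot to exchange instruments?",
        "Can the team easily interact with the robot?",
    ],
)

print(script.interactive_actors())
```

Keeping the actors and prompts in one place makes it easier to adjust the environment and the study guide together when the storyboard changes.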

There are a few parameters that a virtual study does not yet accommodate. These include portability (center of gravity, weight, balance), tactile experience (grip, comfort), haptic feedback (draping, vibration, force feedback), and interaction with graphical user interfaces (touchscreen, keypad). However, evolving VR technology will enable viable interaction with the last of these. Also, combining VR technology with real-world props, a form of augmented reality (AR), will address these parameters by introducing surrogate objects that the VR makes appear as something else.

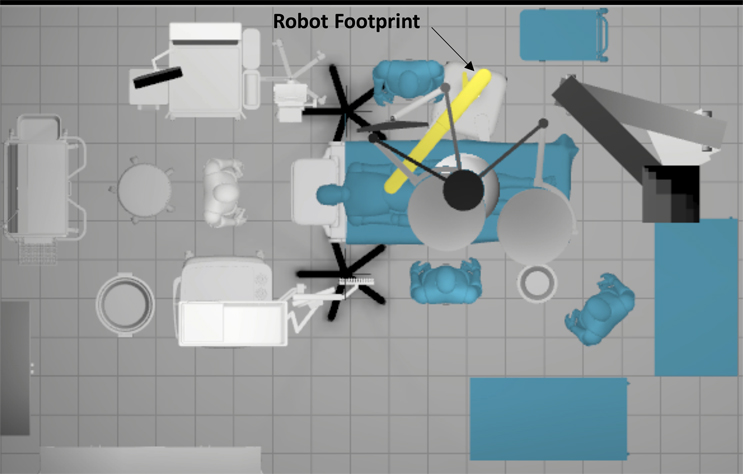

In the surgical robot development example, study participants, both potential users and designers, can experience how specific configurations and feature layouts are impacted in the context of use. The robot’s impact on the context of use can be assessed with a simple evaluation in CAD, but although the CAD is 3D, it is static and not experiential. For example, in Figure 2, the robot appears to integrate appropriately into the sterile field, but the static view does not accurately reflect the device’s kinematics or all the environmental context that could impact usability and clinical integration. Thus, an interactive evaluation of configuration models is critical for an accurate determination of the device’s viability in the OR setting.

Develop CAD system configurations before creating the VR configuration model. Represent all user interfaces and differences between models, such as handles, power button, emergency stop, brake controls, power cord, data cable, etc. Differentiating features may include general architecture that can impact use case scenarios, such as positioning the robot relative to the OR table and patient, access to the patient and other equipment, and exchanging instruments. Variations in the general architecture might include the design of the robot base or the structure of the cantilevered arm or arms. The models should all be rather schematic in their design to avoid biasing feature preference.

In Summary: VR Is A Proven Alternative

VR continues to prove itself as a viable approach in medical device design. Over the years, VR technology has greatly advanced and we expect it to continually evolve as more creative uses are developed for many industries. Application of this methodology is very effective in creating configuration alternatives, particularly in medical device design, as well as in generating inputs for foundational system design decisions. With the latest advancements in VR, it is possible to build much lighter CAD models, without engineering the mechanisms and without the need for fabrication. Using a VR version of a configuration model study can reduce development time and costs by enabling faster iterations, all key in the proliferation of new ideas for devices for medical professionals as well as in-home patient use.

About The Author:

Sean Hägen is founding principal and director of research & synthesis at BlackHägen Design. He has led design research and usability design, within both institutional and home environments, across 20 countries. His role focuses on the user research and synthesis phases of product development, including usability engineering, user-centric innovation techniques, and establishing user requirements. Hägen has a bachelor's degree in industrial design with a minor in human factors engineering from the Ohio State University. He is a member of IDSA (the Industrial Designers Society of America) and HFES (Human Factors and Ergonomics Society), having served two terms on the former’s Board of Directors.
