Separating, Optimizing Device Evaluation And Design Verification A Key To Medtech Success

By Martin Coe

In medical device design and development, two critical development activities — evaluation of your device and design verification of your device — often are treated as one and the same, and this behavior is astonishingly detrimental to successful medical device development.

Although these activities are related and often share similar resources, their approaches, objectives, and prerequisites are critically different. Understanding these differences as you plan and perform your comprehensive development process, especially evaluation and design verification, is crucial to both the device’s performance and its commercialization, in that each must be:

- Planned to identify resources, objectives, prerequisites, reviews, and follow-up actions

- Performed at the proper time during development (relative to other development activities), and only after critical prerequisites have been completed

- Performed with the proper level of rigor to ensure effectiveness

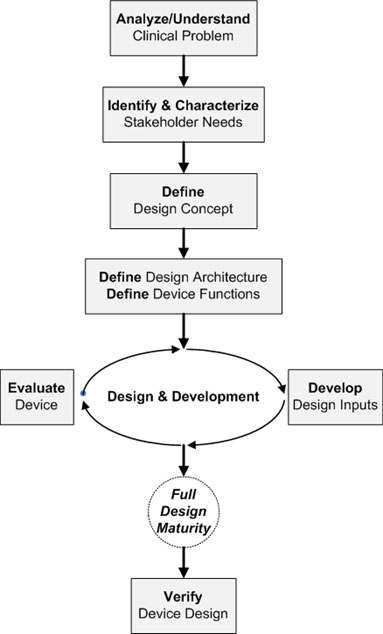

The flowchart below offers a simplified development view, identifying a critical sequence of tasks that form the foundation for both evaluation and design verification activities.

To begin, the development team must understand the clinical problem to be solved and how the device will solve it (intended use); this understanding leads to the characterization of stakeholders and elicitation of their needs (this is an arduous, yet imperative task) while a high-level design concept for the medical device begins to take shape.

At this point, two vitally important — yet commonly neglected — activities must occur:

- System architecting: A single best architecture is selected via tradeoff analysis of key parameters (cost, risk, performance, needs, etc.)

- Functional analysis: Based on the device’s intended use and stakeholders’ needs, and with the architecture and design concept in hand, a complete list of the functions the device must perform is defined
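One common way to run the tradeoff analysis mentioned above is a weighted decision matrix. The sketch below is illustrative only: the candidate architectures, the weights, and the 1-to-5 scores are hypothetical values, not data from any real project, and a real analysis would derive both weights and scores from stakeholder needs and risk assessments.

```python
# Hypothetical weights for the key parameters; they should sum to 1.0.
WEIGHTS = {"cost": 0.2, "risk": 0.3, "performance": 0.3, "needs": 0.2}

# Hypothetical scores from 1 (poor) to 5 (excellent).
# For cost and risk, a higher score means lower cost or lower risk.
CANDIDATES = {
    "architecture_a": {"cost": 4, "risk": 2, "performance": 5, "needs": 4},
    "architecture_b": {"cost": 3, "risk": 4, "performance": 4, "needs": 4},
    "architecture_c": {"cost": 5, "risk": 3, "performance": 2, "needs": 3},
}


def weighted_score(scores, weights):
    """Sum each parameter score multiplied by its weight."""
    return sum(weights[name] * scores[name] for name in weights)


def rank_architectures(candidates, weights):
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


ranking = rank_architectures(CANDIDATES, WEIGHTS)
for name in ranking:
    print(name, round(weighted_score(CANDIDATES[name], WEIGHTS), 2))
```

With these made-up numbers, the balanced candidate ranks ahead of the one with the best raw performance because its risk score is better. That kind of result is what a tradeoff analysis is meant to surface before design work begins.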

These activities lead to an iterative cycle of design and development where designs are developed, evaluated, and improved while design inputs are refined and baselined. This cycle is when device evaluation begins in earnest.

Evaluation Vs. Design Verification

The evaluation process, born from the scientific method, likely starts with an observation of design behavior, leading to formulation of a hypothesis about that behavior; an experiment is conducted to capture data, analyze it, and form a conclusion about that hypothesis.

The true value of evaluation in development is achieved when valid conclusions are drawn and acted upon, constantly improving device design (these two steps ensure a closed-loop process, but too often are neglected during evaluation). Evaluation must occur iteratively, at every level of design, until the team has full confidence that:

- The design performs all functions to their required level

- Adequate design margins are in place

- Design behavior is fully characterized

- Design interfaces are defined and characterized

- All design elements are successfully integrated

Design maturity is defined as the extent to which a design meets the full specification of its need. The above diagram reinforces that full design maturity cannot truly exist without effective evaluation, yet full design maturity must exist before design verification should occur. In other words, evaluation is essential to developing full design maturity, while design verification is not essential to developing full design maturity, but instead benefits from it — as evident in the final device design.

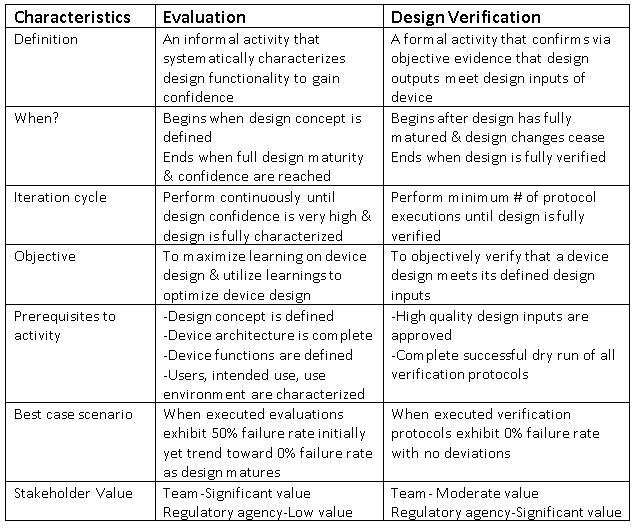

Conversely, design verification is performed to ensure device design inputs have been satisfied, but only after full design maturity has been achieved. Design input quality significantly affects design verification effectiveness, and should be developed only with robust systems engineering methods.

Design verification incorporates the systematic development (requiring formal review and approval), dry run execution, refinement, and formal execution of protocols written to verify design inputs until a 100-percent passing result is achieved. Verification failures and deviations must be investigated as needed — and resolved, forming a closed-loop process.
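The closed loop described above can be pictured as a simple traceability check: design verification is complete only when every design input is covered by at least one formally executed protocol and all of its protocols pass. The following is a minimal sketch under that assumption. The `ProtocolResult` record and the identifiers (`DI-1`, `VP-1`, and so on) are hypothetical, not part of any standard or tool.

```python
from dataclasses import dataclass


@dataclass
class ProtocolResult:
    """Outcome of one formally executed verification protocol (hypothetical record)."""
    protocol_id: str
    design_input_id: str
    passed: bool


def open_design_inputs(design_inputs, results):
    """Return design inputs that have no protocol result or at least one failure."""
    outcomes = {}
    for result in results:
        outcomes.setdefault(result.design_input_id, []).append(result.passed)
    return [
        di for di in design_inputs
        if not outcomes.get(di) or not all(outcomes[di])
    ]


design_inputs = ["DI-1", "DI-2", "DI-3"]
results = [
    ProtocolResult("VP-1", "DI-1", passed=True),
    ProtocolResult("VP-2", "DI-2", passed=False),  # failure to investigate
]

still_open = open_design_inputs(design_inputs, results)
print(still_open)  # inputs that still need investigation or a protocol
```

Here `DI-2` stays open because its protocol failed, and `DI-3` stays open because no protocol covers it. Verification would be complete only when the list is empty.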

Because evaluation does not usually require formal written protocols, approvals, and report conclusions, it’s considered a more informal activity. Still, thoroughness in observation, data capture, and drawing conclusions from evaluation results is vital to achieving full design maturity.

Noteworthy differences between evaluation and design verification activities that can lead to problems are listed in the table and detailed below.

The objective of evaluation is to maximize learning about device design and utilize this learning to optimize the design, while the objective of design verification is simply to verify that a device design meets its defined design inputs via objective evidence. These dissimilar objectives lead to a crucial point.

A best-case scenario for design verification efforts would look like this: Executing all protocols once results in a zero-percent failure rate, with no deviations (this result is highly unlikely with even the simplest of medical devices and the most competent of development teams).

Conversely, the best-case scenario for evaluation would be: Executing all evaluations results in a 50-percent failure rate initially, but approaches a zero-percent failure rate as full design maturity draws near.

Why a 50-percent failure rate? Because true evaluation is about discovery, learning, and improvement, and these thrive when failure and success happen in equal proportions. True learning can only transpire when failure occurs, its root cause is discovered, and the resulting knowledge is applied to improving device design.

Conclusions

If design verification and evaluation are treated as one, major development failures are likely, including:

- Foundational development activities (stakeholder needs elicitation, design concepts, system architecting, functional analysis) will not occur, or will be severely undermined

- The team’s design decisions and actions will not truly be driven by its design inputs

- Full design maturity of your device will never be achieved

Any or all of these failures will significantly affect your device’s performance, leading to stakeholder dissatisfaction and eventual failure of your development project.

Medical device development along the flowcharted path promotes effective design and development, leading to effective evaluation and design verification activities — each planned, developed, and performed to meet its respective objective as part of an overall systems engineering approach to device development. Recognizing the differences in these activities leads to more effective development and results in better medical device performance, and that’s good for all stakeholders.

About The Author

Martin is the founder of Systems Engineering COE (Center of Excellence) and a Systems Engineering expert (INCOSE CSEP) with 23 years of experience leading, optimizing, managing, and contributing to the design and development of Class I/II/III medical devices of all types. He is a Systems Engineering mentor and trainer for technical professionals and a speaker at MDM/10X medical device conferences. His work applying Systems Engineering and Six Sigma principles to medical device development has led to multiple professional awards and culminates with his book on Systems Engineering of Medical Devices, to be published in 2019.